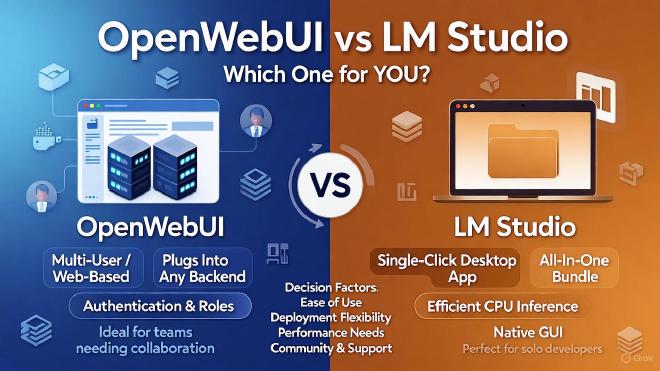

Both OpenWebUI and LM Studio are popular ways to use LLMs on your own machine, but they solve different problems. OpenWebUI is a front end for an inference server you run separately, while LM Studio bundles the inference engine and the UI in one desktop app. Below is a side‑by‑side comparison to help you decide which one fits your workflow best.

## 1. What They Are

| Tool | Primary Goal | Typical Use‑Case |
|---|---|---|
| OpenWebUI | A self‑hosted web UI for interacting with locally run models or remote OpenAI‑compatible APIs. | Chat‑style UI, multi‑user access, easy sharing of prompts, and integration with existing inference back ends (e.g., Ollama, vLLM, Text Generation Inference). |
| LM Studio | An all‑in‑one desktop application that bundles model search and download, an inference engine, and a UI. | Low‑friction setup for developers, researchers, or hobbyists who want to experiment with many models without running separate servers. |

## 2. Architecture & Deployment

| Feature | OpenWebUI | LM Studio |
|---|---|---|
| Installation | Docker (recommended) or a Python install (`pip install open-webui`). Runs as a server you can expose on a LAN or the internet. | Stand‑alone desktop app (Windows/macOS/Linux). Runs locally; no Docker needed. |
| Backend Engine | Does not ship its own inference engine; you point it at an existing server (Ollama, or any OpenAI‑compatible endpoint such as vLLM, Text Generation Inference, or FastChat). | Ships its own inference runtimes: llama.cpp for GGUF models and, on Apple Silicon, Apple's MLX. |
| Model Management | You manage models in the backend (e.g., `ollama pull`, or the model directory of your vLLM/TGI server). | LM Studio searches Hugging Face, downloads pre‑quantised GGUF (or MLX) builds, and keeps them in a local model library. |
| Multi‑User / Auth | Built‑in user accounts, role‑based permissions, API keys, and optional OAuth. Good for small teams or public demos. | Single‑user desktop app; no multi‑user auth (though you can expose its API server on your local network). |
| API Exposure | Provides an OpenAI‑compatible chat endpoint (`/api/chat/completions`, authenticated with an API key) that other tools can call. | Also offers an OpenAI‑compatible API (`/v1/chat/completions`, port 1234 by default), aimed at local scripts and apps rather than public services. |
| Resource Footprint | Light compared with the model server: the heavy lifting is done by the inference server you already run. | Larger because it bundles the inference engine, though memory use is dominated by the model you load (GGUF quantisation keeps it modest). |
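Because both tools speak the OpenAI chat format, one small helper can talk to either. This is a minimal sketch, assuming OpenWebUI's endpoint at `/api/chat/completions` on port 3000 and LM Studio's at `/v1/chat/completions` on port 1234 (check your version's API docs); the helper names are hypothetical, and the hand-rolled escaping only handles single-line prompts (use `jq` for arbitrary text).

```bash
#!/usr/bin/env bash
# Sketch: one helper for any OpenAI-style chat endpoint (OpenWebUI, LM Studio).
# Assumption: prompts are single-line; use jq to build JSON for arbitrary text.

# Escape backslashes and double quotes so the text is a valid JSON string.
json_escape() {
  printf '%s' "$1" | sed 's/\\/\\\\/g; s/"/\\"/g'
}

# Print a chat-completions request body for MODEL and one user PROMPT.
build_payload() {
  printf '{"model":"%s","messages":[{"role":"user","content":"%s"}]}' \
    "$(json_escape "$1")" "$(json_escape "$2")"
}

# chat URL MODEL PROMPT [API_KEY]: POST the request and print the raw JSON reply.
chat() {
  local url=$1 model=$2 prompt=$3 key=${4:-}
  local headers=(-H "Content-Type: application/json")
  if [ -n "$key" ]; then
    # OpenWebUI expects a bearer token; LM Studio's local server does not by default.
    headers+=(-H "Authorization: Bearer $key")
  fi
  curl -sS "$url" "${headers[@]}" -d "$(build_payload "$model" "$prompt")"
}
```

For example, `chat http://127.0.0.1:1234/v1/chat/completions <model-id> "Hello"` targets LM Studio, while `chat http://localhost:3000/api/chat/completions <model-id> "Hello" "$OPENWEBUI_API_KEY"` targets OpenWebUI (`OPENWEBUI_API_KEY` is a hypothetical variable holding a key from your account settings).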

## 3. Performance & Flexibility

| Aspect | OpenWebUI | LM Studio |
|---|---|---|
| Model Size Support | Whatever your backend supports, from 7 B to 70 B+ (if you have the hardware). | Any GGUF (or MLX) model that fits in your RAM/VRAM; a 4‑bit 70 B model needs roughly 40 GB of memory. |
| Quantisation | Handled by the backend (e.g., Ollama's `ollama create --quantize`, or AWQ/GPTQ checkpoints for vLLM). | You pick from the pre‑quantised variants published on Hugging Face (e.g., Q4_K_M, Q5_K_M, Q8_0) directly in the UI; LM Studio does not quantise models itself. |
| GPU vs CPU | Depends on the backend you plug in. You can swap from CPU‑only to CUDA, ROCm, or even TPUs without touching OpenWebUI. | Runs on CPU or offloads layers to the GPU (e.g., CUDA, Vulkan, or Metal via llama.cpp; MLX on Apple Silicon). GPU offload is set per model and takes effect when the model is reloaded. |
| Latency | Typically lower if you pair it with a high‑performance server (e.g., vLLM on a GPU), especially under concurrent load. | Good for modest hardware; small quantised GGUF models can reach tens of tokens per second on a modern laptop, depending on model size and hardware. |
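On the OpenWebUI side, quantisation happens in the backend. The sketch below wraps Ollama's `ollama create --quantize` flow, assuming an Ollama version that supports the flag and a full‑precision (FP16/FP32) source model it can import; the function names, model paths, and model names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: quantise full-precision weights with `ollama create --quantize`.
# Assumptions: your Ollama version supports --quantize, and SRC is an
# FP16/FP32 model (GGUF file or Safetensors directory) that Ollama can import.

# write_modelfile SRC OUT: write a minimal Modelfile that imports SRC.
write_modelfile() {
  printf 'FROM %s\n' "$1" > "$2"
}

# quantize_model SRC NAME [LEVEL]: create model NAME from SRC at LEVEL.
quantize_model() {
  local src=$1 name=$2 level=${3:-q4_K_M} dir modelfile status
  if ! command -v ollama >/dev/null 2>&1; then
    echo "ollama CLI not found" >&2
    return 1
  fi
  # Resolve SRC to an absolute path so FROM does not depend on where
  # the temporary Modelfile lives.
  dir=$(cd "$(dirname "$src")" && pwd) || return 1
  src="$dir/$(basename "$src")"
  modelfile=$(mktemp)
  write_modelfile "$src" "$modelfile"
  ollama create "$name" --quantize "$level" -f "$modelfile"
  status=$?
  rm -f "$modelfile"
  return "$status"
}
```

For example, `quantize_model ./my-model-f16.gguf my-model:q4 q4_K_M` followed by `ollama run my-model:q4`. Models pulled from the Ollama library are usually already quantised, so this is mainly useful for your own full‑precision weights.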

## 4. Ease of Use

| Factor | OpenWebUI | LM Studio |
|---|---|---|
| Setup Time | Docker (or Docker Compose) + a running inference server → ~10 min if you already have Ollama/vLLM. | Download the app → run it → search for and download a model → done (≈5 min plus download time). |
| Learning Curve | You need to understand how to run an inference server and connect the UI to it. | Mostly point‑and‑click; the UI guides you through finding a model and choosing a quantisation level. |
| Documentation | Good docs for Docker, the API, and integration with many backends. | Very beginner‑friendly docs, plus in‑app tooltips. |
| Community & Extensions | Growing ecosystem of tools, functions, and pipelines, on top of built‑in RAG, document upload, and a code interpreter. | Smaller extension ecosystem; mainly focused on model management and local inference. |

## 5. When to Choose OpenWebUI

- You already have a model server (Ollama, vLLM, Text Generation Inference, etc.) and just need a nice UI / API layer.

- Multi‑user access is required – e.g., a small team, classroom, or public demo.

- You want full control over the inference stack (GPU drivers, quantisation method, custom kernels).

- You plan to expose the service over a network (LAN, VPN, or internet) and need authentication / rate‑limiting.

- You like the Docker‑first approach for reproducibility.

Typical stack example

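```bash
# 1️⃣ Run Ollama and pull a model into it (mistral is just an example)
docker run -d --name ollama -p 11434:11434 -v ollama:/root/.ollama ollama/ollama
docker exec ollama ollama pull mistral

# 2️⃣ Run OpenWebUI, pointing it at Ollama on the host
# (--add-host makes host.docker.internal resolve on Linux as well)
docker run -d --name open-webui -p 3000:8080 \
  --add-host=host.docker.internal:host-gateway \
  -v open-webui:/app/backend/data \
  -e OLLAMA_BASE_URL=http://host.docker.internal:11434 \
  ghcr.io/open-webui/open-webui:main
```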

Now you have a web UI at http://localhost:3000 and an OpenAI‑compatible endpoint at http://localhost:3000/api/chat/completions, authenticated with an API key from your account settings.
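`docker run -d` returns as soon as a container starts, so a script that uses the stack immediately may hit a server that is not listening yet. A minimal polling sketch, assuming `curl` is installed; `wait_for` is a hypothetical helper name, and Ollama's `/api/tags` (which lists local models) serves as a cheap readiness probe.

```bash
#!/usr/bin/env bash
# Sketch: wait until an HTTP service answers before using it, e.g. right
# after `docker run -d` returns for Ollama or OpenWebUI.

# wait_for URL [TIMEOUT]: succeed once URL returns a non-error status;
# fail after TIMEOUT attempts spaced one second apart.
wait_for() {
  local url=$1 timeout=${2:-60} i
  for ((i = 0; i < timeout; i++)); do
    # -f makes curl fail on HTTP errors; --max-time bounds each attempt.
    if curl -fsS -o /dev/null --max-time 2 "$url" 2>/dev/null; then
      return 0
    fi
    sleep 1
  done
  echo "timed out waiting for $url" >&2
  return 1
}
```

For example: `wait_for http://localhost:11434/api/tags && wait_for http://localhost:3000 && echo "stack is up"`.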

## 6. When to Choose LM Studio

- You want a single‑click, all‑in‑one desktop experience without juggling containers.

- You are experimenting with many models and want the UI to handle downloads, conversions, and quantisation automatically.

- You have limited hardware (e.g., a laptop) and need the most efficient CPU‑only inference (GGUF quantisation).

- You prefer a native GUI for prompt engineering, chat history, and quick testing.

- You don’t need multi‑user access or external API exposure (or you’re fine with the local API only).

Typical workflow

- Open LM Studio → open the Discover (search) tab → search Hugging Face → pick a model.

- Choose a quantisation level (e.g., `Q4_K_M`).

- Click Download, then load the model from the model picker.

- Start chatting, export logs, or start the local server and call its API (`http://127.0.0.1:1234/v1/chat/completions`).
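API calls need the exact model identifier, which the OpenAI‑style `GET /v1/models` endpoint of LM Studio's local server reports. A dependency‑free sketch using `grep`/`sed` instead of `jq`, assuming the standard `{"data":[{"id":...}]}` response shape and IDs without escaped quotes; `list_model_ids` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: extract model IDs from an OpenAI-style /v1/models response, such
# as the one LM Studio's local server returns. Uses grep/sed rather than jq,
# so it assumes IDs contain no escaped quotes.

# list_model_ids: read JSON on stdin, print each "id" value on its own line.
list_model_ids() {
  grep -o '"id"[[:space:]]*:[[:space:]]*"[^"]*"' |
    sed 's/.*"\([^"]*\)"$/\1/'
}
```

Typical use: `curl -s http://127.0.0.1:1234/v1/models | list_model_ids`; each printed line is a value you can put in the request's `"model"` field.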

## 7. Quick Decision Matrix

| Need | Best Fit |
|---|---|
| Already running a GPU‑accelerated inference server (Ollama, vLLM, etc.) | OpenWebUI |
| Want a web UI that can be accessed by multiple people | OpenWebUI |
| Need a simple, single‑machine desktop app with auto‑download & quantisation | LM Studio |
| Want to experiment with many models quickly on a CPU‑only laptop | LM Studio |
| Need fine‑grained control over backend (custom kernels, TPUs, custom Docker images) | OpenWebUI |
| Prefer not to touch Docker/CLI at all | LM Studio |

## 8. Other Alternatives Worth Mentioning

| Tool | Highlights |
|---|---|
| Ollama | Very easy to spin up models (`ollama run mistral`). Works great as the backend for OpenWebUI. |
| vLLM | High‑throughput GPU server; ideal for serving many concurrent requests. |
| Text Generation Inference (TGI) | Hugging Face's production‑grade inference server; works with OpenWebUI. |
| AutoGPT‑WebUI | Similar to OpenWebUI but focused on AutoGPT‑style agents. |
| ComfyUI‑LLM | If you're already using ComfyUI for diffusion, this adds LLM chat. |
| FastChat | Open‑source chat server + UI; more research‑oriented. |